The above video shows a working prototype that allows users to identify themselves simply by putting their hands down on the surface. This relates to a topic I have recently become more and more interested in: multiuser awareness, that is, user identification or discrimination, for interactive surfaces. Of course, there is DiamondTouch, which can distinguish up to four users and has been widely used in surface research. However, while it is a very useful platform, the technology has its limitations. It is rather costly and requires users to stay in contact with a receiver, i.e. they cannot move freely around the table. It is also limited to front-projected screen output and does not come in larger sizes.

Many surface researchers use vision-based systems, such as Microsoft Surface, and hence have to do their research without user identification at hand. In this context, it is interesting to observe that the application of user identification does not seem to be guided by requirements; in fact, it seems to be mostly governed by the availability of technology. This raises questions about enabling technologies. Which input technologies can be used to identify users? What are their advantages, and what are their limitations? How do they influence the potential of multiuser-aware interaction techniques?

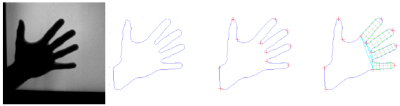

There are several approaches that bring some form of user identification to surfaces. For example, styluses or other ID-carrying devices, such as PDAs or mobile phones (see the PhoneTouch project for further details), can be used. Other options are to employ external trackers [1] or to use biometric features. One could imagine a surface that is essentially a very large fingerprint reader [2] using FTIR, realized either with an ultra-high-resolution camera or, more likely, with a steerable camera. In this context, I have started to explore the applicability of hand contour or silhouette information for identification, as it is easier to acquire and still provides a sufficient degree of user discrimination power.

A very exciting dimension of multiuser-aware interfaces is of course the space of possible interaction techniques. In this context, iDwidgets [3] gives an excellent idea of what user identification makes possible for widgets, ranging from support for modal input sequences to semantic user interface elements to interesting approaches for group input, such as voting. In the future, I hope to explore this field further, build input devices that enable user identification, test their advantages and limitations, and develop and evaluate multiuser-aware interaction techniques.

References

[1] Partridge, G. A., and Irani, P. P. IdenTTop: A flexible platform for exploring identity-enabled surfaces. In Proc. CHI EA 2009.

[2] Sugiura, A., and Koseki, Y. A user interface using fingerprint recognition: Holding commands and data objects on fingers. In Proc. UIST 1998.

[3] Ryall, K., et al. iDwidgets: Parameterizing widgets by user identity. In Proc. Interact 2005.

Related Publications

- Schmidt, D. Towards personalized surface computing. UIST 2010 Doctoral Symposium.

- Schmidt, D., Chong, M., and Gellersen, H. IdLenses: Dynamic Personal Areas on Shared Surfaces. In Proceedings of ITS 2010, pp. 131-134.

- Schmidt, D., Chong, M., and Gellersen, H. HandsDown: Hand-contour-based user identification for interactive surfaces. In Proceedings of NordiCHI 2010, pp. 432-441.

- Schmidt, D. Know thy toucher. In CHI 2009 Workshop: Multitouch and Surface Computing.