Biometric User Identification for Multi-touch Surfaces Based on Hand Contours

HandsDown is a novel technique for user identification on interactive surfaces. It enables users to access personal data on a shared surface, to associate objects with their identity, and to fluidly customize the appearance, content, or functionality of the user interface. To identify themselves, users place a hand flat on the surface. HandsDown is based on hand contour analysis; neither user instrumentation nor external devices are required for identification.

A demo video shows HandsDown being used to access an online photo library (such as Picasa or Flickr).

We use a camera-based multi-touch surface to extract characteristic features of the hand and Support Vector Machines (SVM) to register and identify users. Multi-touch finger interactions co-exist with hand-based identification. We tested our system with 17 users; the literature suggests that hand contours can be used robustly for groups of about 500 users [1].
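As a rough illustration of the register-and-identify step, the sketch below trains one linear SVM per user (one-vs-rest) on hand feature vectors and assigns a new hand to the user whose classifier scores highest. It is not the HandsDown implementation: the `HandIdentifier` class, the three-value feature vectors, and the choice of a linear SVM fitted by batch sub-gradient descent on the hinge loss are all assumptions made to keep the example self-contained.

```python
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def train_binary_svm(samples: List[Vector], labels: List[int],
                     lr: float = 0.1, lam: float = 0.01,
                     steps: int = 1000) -> Tuple[List[float], float]:
    """Fit a linear SVM (labels +1/-1) by batch sub-gradient descent on the hinge loss."""
    dim, n = len(samples[0]), len(samples)
    w, b = [0.0] * dim, 0.0
    for _ in range(steps):
        grad_w = [lam * wj for wj in w]  # gradient of the L2 regulariser
        grad_b = 0.0
        for x, y in zip(samples, labels):
            score = sum(wj * xj for wj, xj in zip(w, x)) + b
            if y * score < 1.0:  # this sample violates the margin
                for j in range(dim):
                    grad_w[j] -= y * x[j] / n
                grad_b -= y / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


class HandIdentifier:
    """One-vs-rest linear SVMs over hand feature vectors (hypothetical class)."""

    def __init__(self) -> None:
        self.samples: List[Vector] = []
        self.owners: List[str] = []
        self.models: Dict[str, Tuple[List[float], float]] = {}

    def register(self, user: str, features: List[Vector]) -> None:
        """Store a user's training samples and retrain every classifier."""
        self.samples.extend(features)
        self.owners.extend([user] * len(features))
        for name in set(self.owners):
            labels = [1 if owner == name else -1 for owner in self.owners]
            self.models[name] = train_binary_svm(self.samples, labels)

    def identify(self, features: Vector) -> str:
        """Return the registered user whose classifier gives the highest score."""
        def score(name: str) -> float:
            w, b = self.models[name]
            return sum(wj * xj for wj, xj in zip(w, features)) + b
        return max(self.models, key=score)


# Made-up, normalised feature vectors; real features would come from the hand contour.
identifier = HandIdentifier()
identifier.register("alice", [(0.90, 0.20, 0.10), (0.88, 0.22, 0.12), (0.92, 0.18, 0.09)])
identifier.register("bob", [(0.10, 0.90, 0.20), (0.12, 0.88, 0.21), (0.09, 0.91, 0.18)])
identifier.register("carol", [(0.20, 0.10, 0.90), (0.21, 0.12, 0.88), (0.18, 0.09, 0.92)])
print(identifier.identify((0.89, 0.21, 0.11)))
```

A production system would more likely rely on an established SVM library and would also need a way to reject hands that belong to no registered user, which this sketch does not attempt.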

IdLenses: Dynamic Personal Areas on Shared Surfaces

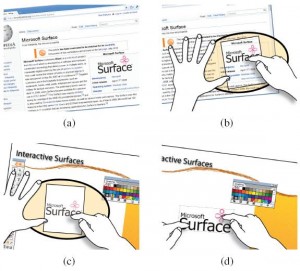

IdLenses is a novel interaction concept to realize user-aware interfaces on shared surfaces. Users summon virtual lenses which allow for personalized input and output. The ability to create a lens instantaneously anywhere on the surface, and to move it around freely, enables users to fluidly control which part of their input is identifiable, and which shall remain anonymous. In this paper, we introduce the IdLenses concept and its interaction characteristics.
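One way to picture how a lens separates identifiable from anonymous input is a simple hit test: a touch that lands inside a lens is attributed to the lens owner, and any other touch stays anonymous. The sketch below assumes rectangular lenses and uses hypothetical names (`IdLens`, `attribute_touch`); the actual lens shape and event handling are not specified here.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float]


@dataclass
class IdLens:
    """A personal lens owned by one user (assumed rectangular for simplicity)."""
    owner: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, point: Point) -> bool:
        """Check whether a touch point lies within the lens bounds."""
        px, py = point
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)

    def move_to(self, x: float, y: float) -> None:
        """Reposition the lens; its size and owner are unchanged."""
        self.x, self.y = x, y


def attribute_touch(point: Point, lenses: List[IdLens]) -> Optional[str]:
    """Return the owner of the topmost lens under the touch, or None if anonymous."""
    for lens in reversed(lenses):  # later lenses are treated as drawn on top
        if lens.contains(point):
            return lens.owner
    return None


lenses = [IdLens(owner="alice", x=100, y=100, width=200, height=150)]
inside = attribute_touch((150, 120), lenses)   # touch through Alice's lens
outside = attribute_touch((500, 400), lenses)  # touch elsewhere on the surface
```

Because attribution depends only on where the lens currently sits, moving the lens changes which touches are identifiable without any other state being updated.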

For example, a clipboard function allows users to maintain a personal clipboard and hence work independently on a shared surface. The user invokes the IdLens over the object they intend to copy. Selecting the object through the lens puts it into the user's clipboard. To insert the object again, the user invokes the IdLens at a different location and pastes it. This and three further example scenarios are illustrated in the accompanying video.
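The personal clipboard can be sketched as a mapping from user identity to the last copied object, so that copy and paste actions made through different users' lenses never interfere. The names below (`SharedSurface`, `copy_through_lens`, `paste_through_lens`) are hypothetical and only illustrate the idea; they assume the lens has already supplied the acting user's identity.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]


@dataclass
class SurfaceObject:
    """An item shown on the shared surface (hypothetical type)."""
    name: str
    position: Point


class SharedSurface:
    """Shared surface that keeps one clipboard slot per identified user."""

    def __init__(self) -> None:
        self.objects: List[SurfaceObject] = []
        self._clipboards: Dict[str, SurfaceObject] = {}

    def copy_through_lens(self, user_id: str, obj: SurfaceObject) -> None:
        """Store the selected object in the clipboard of the lens owner."""
        self._clipboards[user_id] = obj

    def paste_through_lens(self, user_id: str, position: Point) -> Optional[SurfaceObject]:
        """Place a copy of the lens owner's clipboard content at the given position."""
        source = self._clipboards.get(user_id)
        if source is None:
            return None  # this user has not copied anything yet
        pasted = SurfaceObject(source.name, position)
        self.objects.append(pasted)
        return pasted


surface = SharedSurface()
photo = SurfaceObject("photo", (10.0, 10.0))
note = SurfaceObject("note", (40.0, 25.0))
surface.objects.extend([photo, note])

surface.copy_through_lens("alice", photo)  # Alice copies through her lens
surface.copy_through_lens("bob", note)     # Bob copies independently
pasted_by_alice = surface.paste_through_lens("alice", (200.0, 80.0))
pasted_by_bob = surface.paste_through_lens("bob", (300.0, 90.0))
```

Keying the clipboard by user rather than keeping a single surface-wide slot is what lets several people copy and paste at the same time without overwriting each other's content.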

References

[1] E. Yörük, E. Konukoglu, B. Sankur, and J. Darbon. Shape-based hand recognition. IEEE Transactions on Image Processing, 15(7):1803–1815, 2006.

Related Publications

- Schmidt, D., Chong, M., and Gellersen, H. IdLenses: Dynamic Personal Areas on Shared Surfaces. In Proceedings of ITS 2010, pp. 131-134.
- Schmidt, D., Chong, M., and Gellersen, H. HandsDown: Hand-contour-based user identification for interactive surfaces. In Proceedings of NordiCHI 2010, pp. 432-441.
